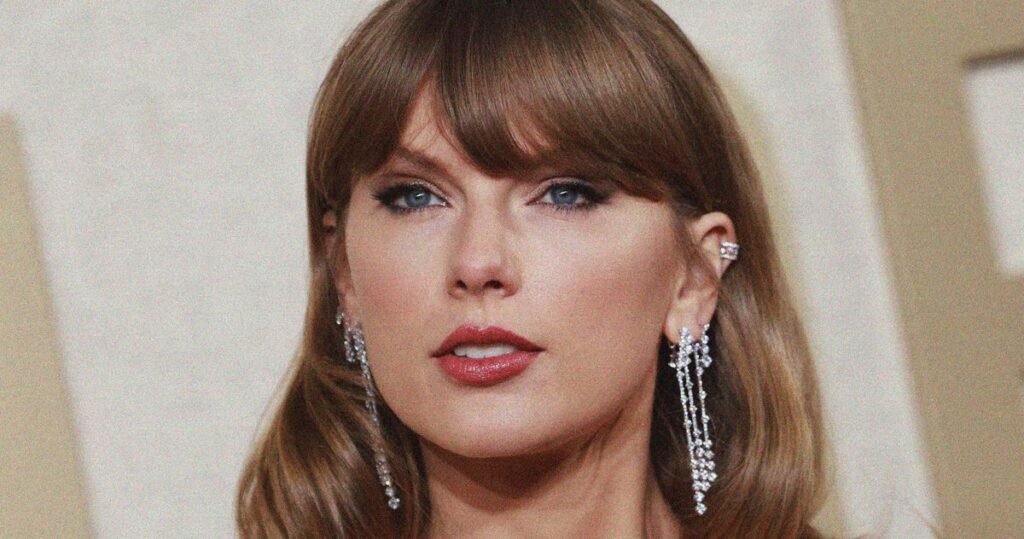

Photo: MICHAEL TRAN/AFP via Getty Images

Throughout Taylor Swift’s decades-long career, her status as one of the world’s most influential artists has not completely shielded her from non-consensual sexual advances and grotesque invasions of privacy. In 2017, the singer won a lawsuit against a DJ she accused of groping her at a meet-and-greet, ultimately securing a symbolic $1 in damages. In 2019, she accused Kanye West of “revenge porn” after his “Famous” music video featured a wax figure of her naked in bed with him. It’s been about seven years since singer-songwriter Father John Misty raised eyebrows with the line, “Bedding Taylor Swift every night inside the Oculus Rift,” predicting a future in which advanced technology would be used to humiliate and exploit celebrities. Now, graphic deepfake images of the pop singer have gone viral. Here’s everything you need to know.

This week, sexually explicit fake photos of Swift began circulating online, renewing concerns about the ethics of deepfake technology and its role in the online harassment of women. Some sources suggest the images originated in a Telegram group. Others believe they have traced the photos to the tabloid website Celeb Jihad, against which Swift reportedly considered legal action after it claimed her nudes had been “leaked” in 2011. The images gained further attention after spreading on social media platforms such as X, Reddit, and Instagram. One of the most widely shared images was viewed more than 45 million times and remained up for 17 hours before X suspended the account that posted it, according to The Verge.

Deepfakes violate X’s content policy, which says, “You may not share synthetic, manipulated, or out-of-context media that is likely to deceive, confuse, or harm people.” However, since Elon Musk took control of the site in 2022, it has struggled to moderate content because of staff cuts and relaxed rules. Last year, Ella Irwin, X’s head of trust and safety, resigned, citing a disagreement over principles: “It was important to me that there was an understanding that hate speech, such as violent graphic content, would not be promoted or amplified,” she said.

X suspended several accounts, but the images continued to spread, prompting Swift’s fans to take matters into their own hands. They began flooding X with photos and videos of Swift’s concert performances to bury the explicit images. They also coordinated to report the accounts behind the photos.

Rumors have surfaced that Swift is considering legal action. A source close to the singer told the Daily Mail: “While we are currently deciding whether to pursue legal action, one thing is clear: These AI-generated fake images are abusive, offensive, exploitative, and done without Taylor’s consent or knowledge.” Swift’s publicist, Tree Paine, did not respond to The Cut’s request for comment.

As deepfake technology becomes more widely available, the risk of misuse increases, reinforcing the urgency of reform. Last May, Democratic Representative Joe Morelle introduced the Preventing Deepfakes of Intimate Images Act, which would make the creation of such images a federal crime. On Thursday, he wrote on X: “The prevalence of explicit AI-generated images of Taylor Swift is horrifying. And sadly, it happens every day to women everywhere.” Yvette Clarke, another Democrat and one of the co-authors of the pending DEEPFAKES Accountability Act, said: “What happened to Taylor Swift is nothing new…This is an issue both sides of the aisle and even Swifties should be able to come together to solve.”