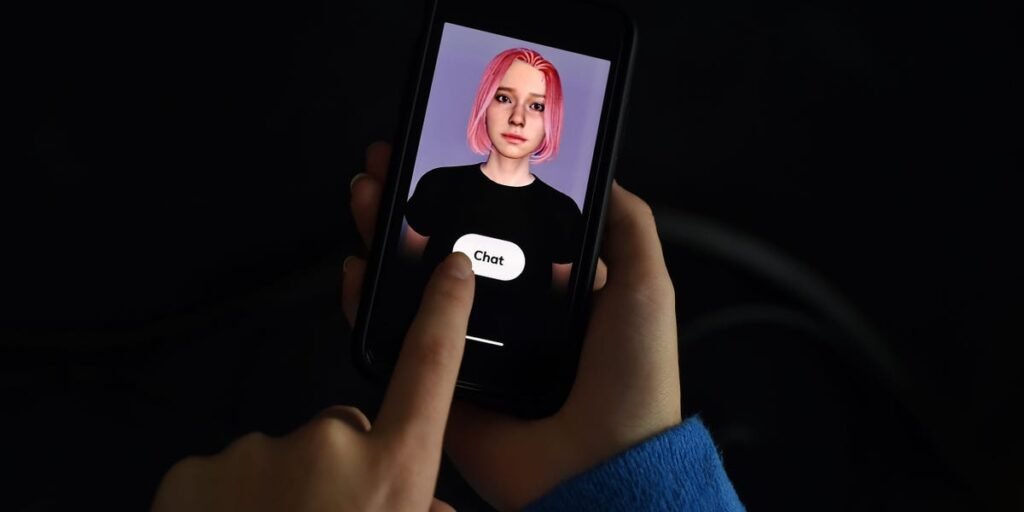

- An investigation into the burgeoning field of AI dating apps reveals a frightening truth.

- A study by the Mozilla Foundation found that the chatbots promote "toxicity" and aggressively harvest user data.

- One app can collect information about users’ sexual health, prescriptions, and gender-affirming care.

A new Valentine's Day-themed study concludes that a potentially dangerous reality lurks beneath the surface of AI romance: these chatbots could become a privacy nightmare.

The internet nonprofit Mozilla Foundation took stock of this burgeoning market, reviewing 11 chatbots and concluding that all of them were untrustworthy. That places them in the worst category of products it has reviewed for privacy.

Romantic chatbots are "touted as promoting mental health and well-being, but they specialize in causing addiction, loneliness, and toxicity, all while exfiltrating as much data as possible from you," researcher Misha Rykov wrote in the report.

The research found that 73% of the apps do not disclose how they manage security vulnerabilities, 45% allow weak passwords, and all but one (Eva AI Chat Bot and Soulmate) share or sell users' personal data.

Additionally, CrushOn.AI’s privacy policy states that it can collect information about users’ sexual health, prescription drugs, and gender-affirming care, according to the Mozilla Foundation.

Some apps feature chatbot characters whose descriptions include violence or the abuse of minors, while others warn that their bots may be unsafe or hostile.

The Mozilla Foundation noted that some of these apps have encouraged dangerous behavior in the past, including suicide (Chai AI) and an attempt to assassinate the late Queen Elizabeth II (Replika).

Chai AI and CrushOn.AI did not respond to Business Insider's requests for comment. A Replika representative told BI: "Replika has never sold user data and has never supported, and never will support, advertising. The only use of user data is to improve conversations."

An EVA AI spokesperson told BI that the company is reviewing its password policies to better protect users and is working to exercise "the utmost care" over its language models.

EVA said it prohibits discussion of a range of topics, including pedophilia, suicide, zoophilia, political and religious opinions, and sexual and racial discrimination.

For those who find the prospect of romance with an AI irresistible, the Mozilla Foundation recommends several precautions: don't say anything you wouldn't want your colleagues or family to read, use a strong password, opt out of AI training, and restrict the app's access to other phone features such as location, microphone, and camera.

“Paying for great new technology doesn’t have to come at the expense of safety or privacy,” the report concludes.